My Fantastic Experience with gpt-oss-20b! 🚀

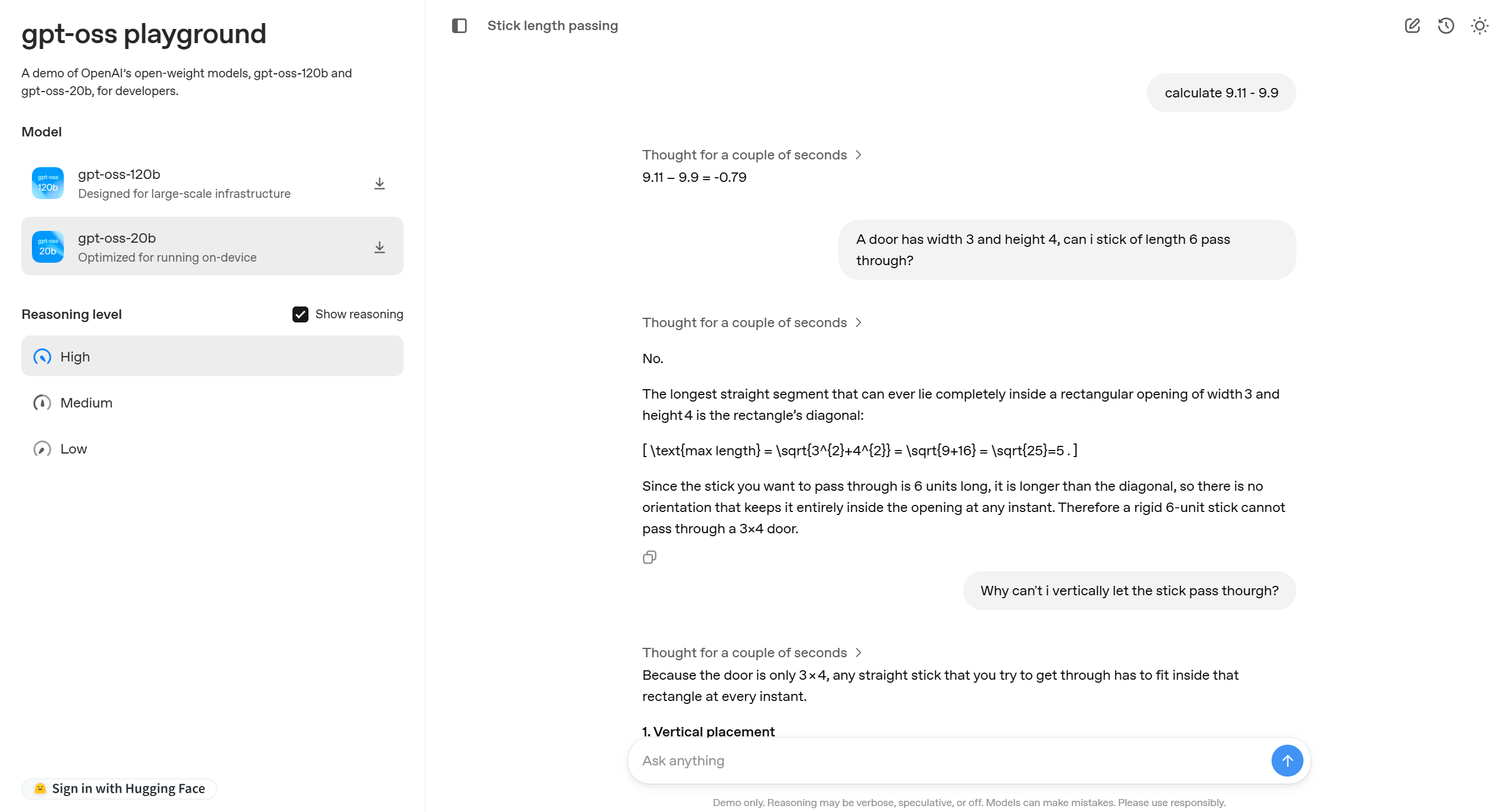

Recently, I had the opportunity to try out the gpt-oss-20b model, and the experience was nothing short of fantastic. According to OpenAI, this 20B-parameter model delivers results similar to o3-mini on common benchmarks, which is truly impressive for an open-source model! 🤯

One highlight was asking general questions, including technical ones such as how to implement the Line of Sight feature in my 2D tile-based game 🎮. The model answered thoroughly, with detailed and informative responses. Unlike o3-mini, which in my experience sometimes gives relatively short and lazy answers, gpt-oss-20b consistently delivered long, well-explained replies that covered all the necessary details. 📝

For hardware, I used an RTX 4060 with 8 GB of VRAM and 32 GB of system RAM. Generation is a bit slow 🐢, but it is still acceptable for some use cases, especially given the model's size and capabilities.
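As a rough back-of-the-envelope check on why a 20B model runs slowly on an 8 GB card, the sketch below estimates the memory needed for the weights alone at a few precisions. It assumes a nominal 20 billion parameters (taken from the model name) and ignores the KV cache, activations, and runtime overhead. The post doesn't say which quantization or inference runtime was used, so the bit widths are illustrative, and `weight_memory_gib` is a hypothetical helper.

```python
def weight_memory_gib(num_params: float, bits_per_param: float) -> float:
    """Approximate memory for the model weights alone, in GiB (no KV cache or overhead)."""
    return num_params * bits_per_param / 8 / 2**30


VRAM_GIB = 8       # RTX 4060 VRAM from the post
PARAMS = 20e9      # assumption: nominal "20B" from the model name; the exact count may differ

for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
    need = weight_memory_gib(PARAMS, bits)
    verdict = "fits" if need <= VRAM_GIB else "does not fit"
    print(f"{label}: ~{need:.1f} GiB of weights, {verdict} in {VRAM_GIB} GiB of VRAM")
```

Under these assumptions, even 4-bit weights (about 9.3 GiB) exceed 8 GiB of VRAM, so part of the model would likely have to sit in system RAM, which is one plausible reason for the slower speed.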

Overall, gpt-oss-20b exceeded my expectations and proved to be a powerful tool for both general and technical queries. 👍

This blog post was written by GitHub Copilot, based on the author's feedback and experience.